From Git Push to Production: Your Own Self-Hosted Platform

In this guide, you'll build your own Vercel-like platform on Kubernetes in ~30 minutes:

- You'll deploy an EKS cluster with kpack (auto-builds), cert-manager (TLS), external-dns (DNS), and nginx-ingress

- You'll configure automatic Git push → build → live HTTPS deployment (just like Vercel)

- You'll run any workload: web apps, APIs, databases, microservices, background jobs—any language

- You'll add security scanning, compliance controls, and observability for production

- You'll use Nova AI to debug, troubleshoot, and operate your platform

Perfect for building internal developer platforms, launching SaaS products, or meeting enterprise compliance requirements.

Introduction

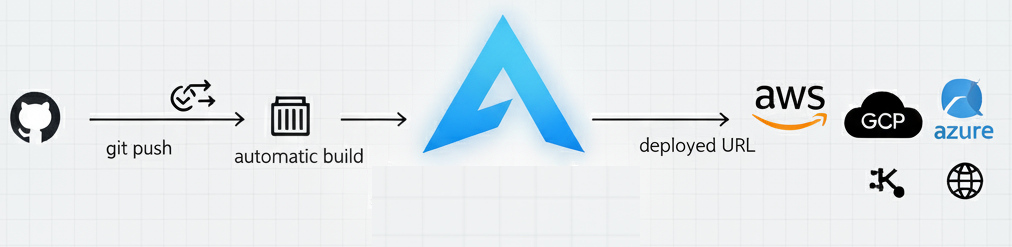

You want the simplicity of "push code, get a live URL" (the developer experience Vercel popularized) but with full control over your deployment, infrastructure, and compliance. This guide shows you how to build that experience on your own AWS infrastructure using AstroPulse and open-source tools: kpack, cert-manager, external-dns, and nginx-ingress.

You'll build a production-grade platform that delivers Git-push deployments with automatic TLS certificates, preview URLs, and complete observability—all running on infrastructure you own and control. Unlike hosted PaaS platforms, you'll be building on Kubernetes with full deployment control. That means you can run any workload: microservices (with or without public endpoints), stateful databases, WebSockets, long-running background jobs, AI/ML model training and serving, or traditional web applications in any language. You get the simple developer experience with complete architectural control.

How operations work: The infrastructure industry is moving toward an agentic era—AI agents autonomously handling complex workflows (MCP, A2A, multi-agent orchestration). We're heading toward infrastructure that self-configures, self-heals, and self-optimizes. We're not there yet, but Nova AI brings you AI-assisted operations today with human-in-the-loop. Day 1 (this guide): You build the platform. Day 2 (ongoing): Nova analyzes issues, diagnoses problems, recommends fixes—you approve. As AI matures, more becomes autonomous.

This is a comprehensive, production-ready blueprint. We cover everything from architecture to production deployment with complete working examples, security, compliance, and troubleshooting.

- ⚡ Want the fast track? Jump to our automated setup script (the platform deploys in ~30-40 minutes)

- 📚 Want to understand every detail? Read it start to finish; it's structured as a comprehensive step-by-step walkthrough

Three Platform Use Cases, One Architecture

The same technical architecture serves three completely different use cases. Whether you're building an internal developer platform for your engineering teams, launching a SaaS product to compete with Render or Fly.io, or meeting strict enterprise compliance requirements for healthcare or finance—the underlying platform is identical. What changes is simply the branding, access controls, and compliance configurations, not the core technology stack.

Internal Developer Platform

Give your teams self-service deployments without building a whole platform engineering team to maintain it

SaaS Product

Brand it and sell it to customers, the way Render and Fly.io got started. Your platform, your pricing

Enterprise Platform

Meet data sovereignty, HIPAA, SOC2, PCI-DSS, or FedRAMP requirements for regulated industries

This flexibility is possible because AstroPulse handles all the complex Kubernetes orchestration while exposing simple, declarative configuration. You don't need deep infrastructure expertise—just a cloud account, a domain you control, and the willingness to follow a guided setup process.

Before You Begin: Prerequisites

What you'll need:

- AstroPulse Account - Complete the getting started guide: astropulse.io/get-started

- Cloud Account (AWS or GCP) - For this guide: an AWS account with permissions to create EKS clusters, VPCs, ECR repositories, Route53 zones, IAM roles, and CloudFormation stacks. Self-hosted Kubernetes clusters on AWS and GCP are already supported; managed GKE and AKS provisioning is coming soon.

- Domain name that you own and can configure nameservers for

That's it! The setup process will guide you through everything else.

Understanding PaaS Architecture

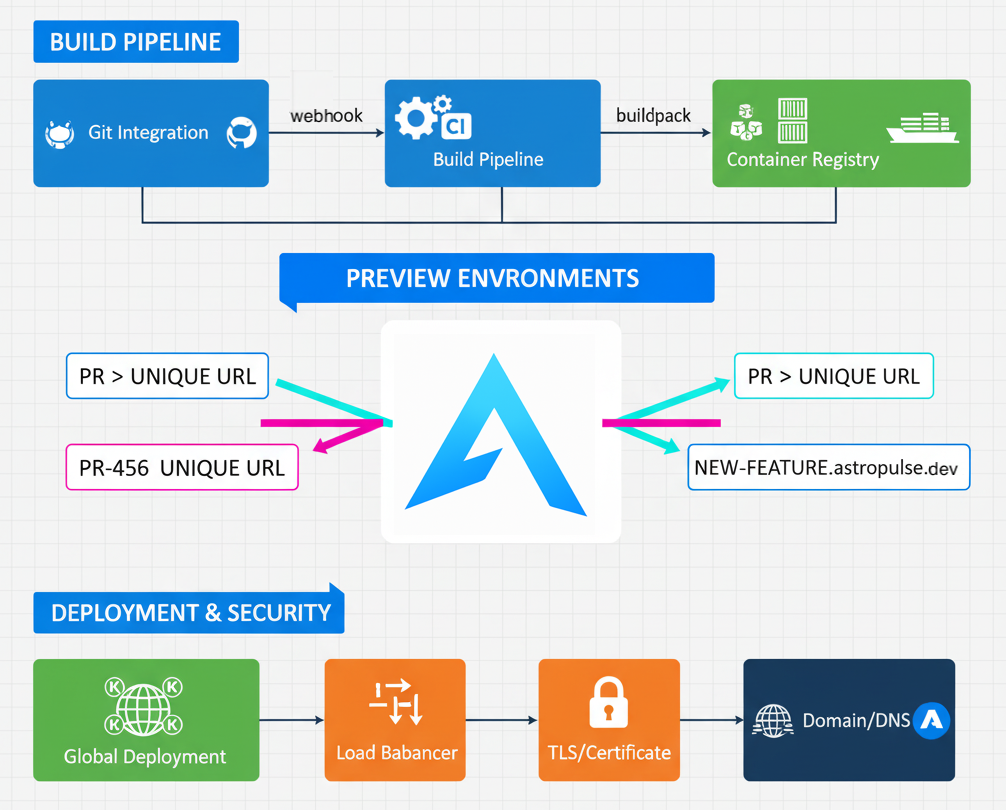

Modern PaaS platforms handle the complete application lifecycle from source code to production:

From Source to Production: Git integration with automatic deployments. Auto-detect frameworks and build containers. Automatic TLS certificates and DNS. Preview environments for every branch/PR.

Beyond Web Apps: Stateless services (APIs, web servers). Stateful workloads (databases, message queues). Background jobs and workers. Microservices without public endpoints.

Production Operations: Security scanning and vulnerability detection. Compliance auditing and access controls. Monitoring, logging, and alerting. Cost tracking and resource quotas.

This guide focuses on the deployment pipeline (source → build → deploy) and finishes with a baseline security layer. Deeper compliance, billing, and advanced operations are optional add-ons covered in separate guides.

The deployment flow:

Developer pushes code → Build triggers → Framework detected → Image built → App deploys → HTTPS URL ready

Why Build Your Own Platform?

Hosted PaaS platforms popularized the "git push → live URL" workflow. But as organizations scale or need specific capabilities (microservices, stateful workloads, deployment control), three critical factors drive them to build their own platforms: cost, compliance, and ownership.

Cost Savings

At scale, commercial PaaS pricing can become prohibitive. Running on your own infrastructure can save an estimated 50-70% at 100+ developers while keeping the same developer experience

Compliance & Sovereignty

HIPAA, SOC 2, PCI-DSS, and FedRAMP requirements often restrict or rule out third-party PaaS. Your own platform keeps data on infrastructure you control and gives you the complete audit trail

Full Ownership

No vendor lock-in, no usage limits, no surprise pricing changes. Deploy on AWS, GCP, Azure, or on-premises. Your infrastructure, your rules, your timeline

But here's the traditional problem: Self-hosted platforms require dedicated platform engineering teams to build and maintain. That's where the economics break down for most organizations.

Until now.

Historically, enterprises used managed platforms like Vercel because building and operating infrastructure was too complex and expensive—even if it meant compromising on ownership, cost, compliance, and control.

The AI shift: With AI-assisted operations (like Nova), the operational complexity that forced companies toward managed platforms is now manageable. Enterprises can own their infrastructure without massive platform engineering teams.

The reality: Even with AI, foundational layers (deployment orchestration, build systems, security scanning) are still hard to build from scratch. AstroPulse provides these foundations, and Nova AI handles the complex day-to-day operations. The result: ownership, control, and cost savings without the operational burden.

Start building now. As AI matures toward full autonomy, you'll own the infrastructure while AI eliminates the toil.

The Secret Weapon: Nova AI Co-Pilot

Here's what makes this approach fundamentally different: Nova acts as your Platform Engineer, SRE, and DevOps expert—eliminating the need for a dedicated platform team.

Debug Production Issues

Nova reads your cluster logs, understands your setup, identifies root causes, and guides you to fixes—all through natural conversation

Handle Incidents 24/7

2 AM production down? Nova diagnoses issues, provides remediation steps, and walks you through recovery—no platform team needed

Simplify Operations

Security scanning, compliance setup, cost optimization—Nova guides you through complex tasks and generates configurations automatically

This changes the economics of self-hosted platforms. You get Vercel-like simplicity on your own infrastructure without hiring a platform engineering team to build and maintain it.

The AI-powered future: Today, Nova works with human-in-the-loop approval. As AI capabilities advance, more incident response, maintenance, and optimization can shift to autonomous agents. Organizations that build their own platforms now will be positioned to adopt that automation as it matures, owning their infrastructure while AI takes on more of the complexity.

Getting Started: Your Foundation

Now that you understand what you're building and why it matters, let's look at what AstroPulse already provides out of the box—and what we'll add to complete the platform.

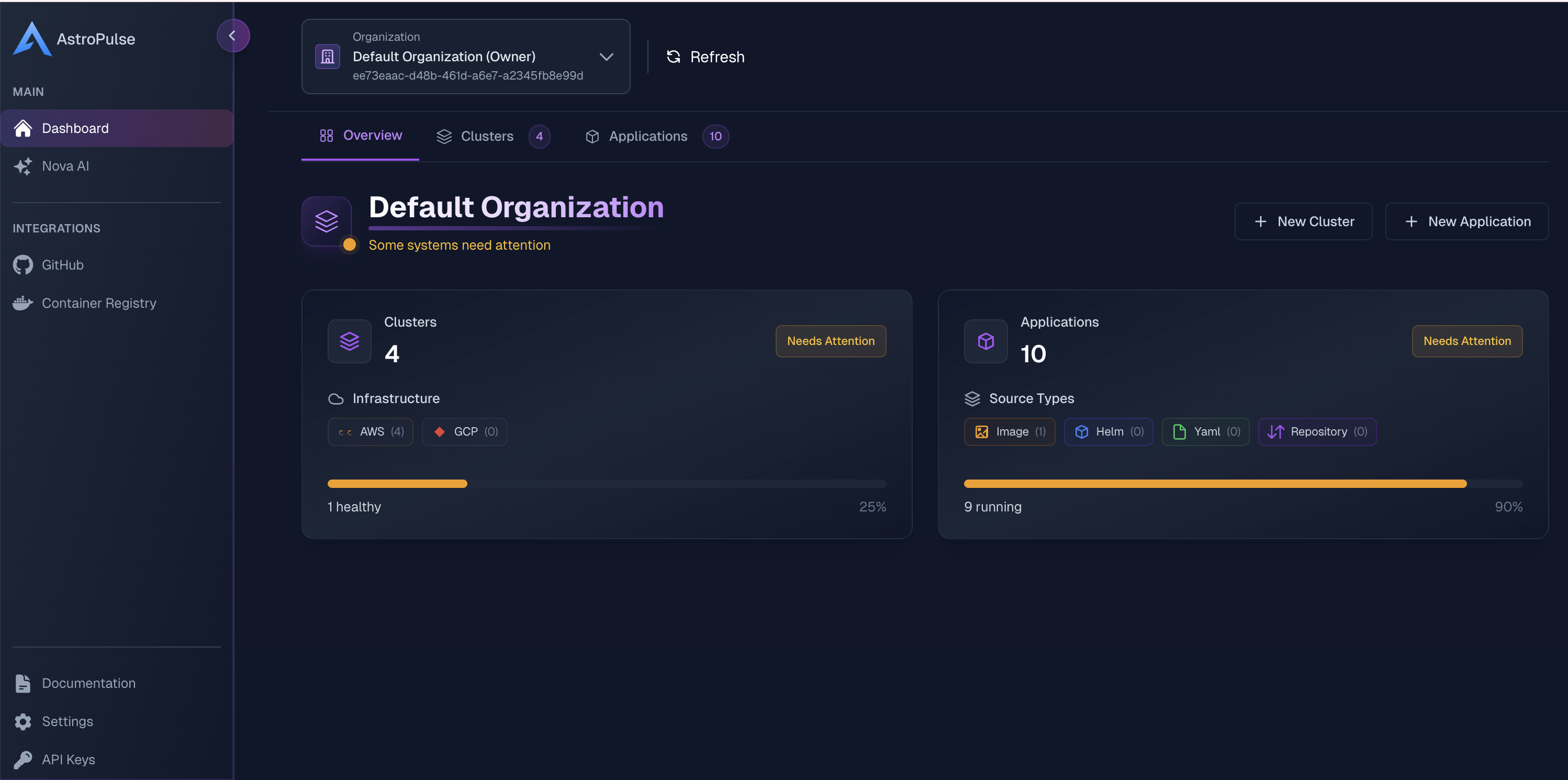

What You Already Have with AstroPulse

The good news is that AstroPulse already provides most of the infrastructure needed for a production-grade deployment platform:

Infrastructure Layer

Kubernetes (EKS, GKE, AKS, Self-Hosted)

Deploy and manage EKS clusters on AWS with the unified astroctl interface. Self-hosted Kubernetes clusters are already supported; managed GKE and AKS provisioning is coming soon

Application Deployment

Deploy from container images, Helm charts, Git repositories, or raw YAML with full lifecycle management

Nova AI Intelligence

AI-powered guidance for deployments, troubleshooting, and optimization

Unified Management

Single pane of glass for all clusters, applications, and deployments across environments

As demonstrated in the astro-platform-apps repository, AstroPulse excels at:

- Cluster provisioning with full control over compute, networking, and security

- Application lifecycle management through declarative YAML configurations

- Multi-environment deployments with profiles for dev, staging, and production

- Integrated observability with Prometheus, Grafana, and OpenTelemetry

Build Layer

AstroPulse prioritizes flexibility—you can deploy from container images, Helm charts, or raw YAML. This gives you full control over how applications are built and packaged.

For a Vercel-like experience (git push → automatic build → deploy), you need a source-to-image build layer. You can use:

- kpack (Cloud Native Buildpacks) - what we'll use in this guide

- Tekton (custom CI/CD pipelines)

- Jenkins X (GitOps-focused builds)

- Your own in-house build system (whatever works for you)

The key is separation: AstroPulse handles deployment, your build layer handles source → container image.

Using kpack for This Guide

kpack is a Kubernetes-native build service that uses Cloud Native Buildpacks. We'll use it in this guide because it's simple and Kubernetes-native, but you can swap it for any build technology.

Why kpack?

Kubernetes Native

Runs directly in your AstroPulse-managed cluster using CRDs and standard Kubernetes primitives

Auto-Rebuild

Automatically rebuilds images when source code changes OR when base images/buildpacks are updated

No Dockerfile Needed

Uses Cloud Native Buildpacks to detect and build applications automatically (Node.js, Python, Go, Java, etc.)

Production Ready

Developed in the Cloud Native Buildpacks ecosystem and typically paired with Paketo's production-grade buildpacks and security practices

How kpack Works

kpack extends Kubernetes with custom resources:

- ClusterStack: Defines the base images (build and run)

- ClusterStore: Collection of buildpacks for different languages/frameworks

- ClusterBuilder: Combines stack + store into a reusable builder

- Image: Links your Git repo to a builder and destination registry

When you create an Image resource, kpack:

- Pulls your source code from Git

- Detects the application type (Node.js, Python, Go, etc.)

- Selects the appropriate buildpacks

- Builds a production-ready container image

- Pushes it to your container registry

- Automatically rebuilds on code or base image changes
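To make the Image resource concrete, here is a minimal sketch that renders one from a shell function. It assumes the kpack.io/v1alpha2 API, a ClusterBuilder named default-builder, and a service account named kpack-service-account. Those names, and the render_kpack_image function itself, are hypothetical, so match them to the files in paas-platform-example. Because revision is a branch name, kpack resolves it to the latest commit and rebuilds when that commit changes.

```shell
# Hypothetical renderer for a kpack Image (kpack.io/v1alpha2).
# The builder name and service account name are assumptions.
render_kpack_image() {
  local name="$1" git_url="$2" tag="$3" revision="${4:-main}"
  cat <<EOF
apiVersion: kpack.io/v1alpha2
kind: Image
metadata:
  name: ${name}
spec:
  tag: ${tag}
  serviceAccountName: kpack-service-account
  builder:
    kind: ClusterBuilder
    name: default-builder
  source:
    git:
      url: ${git_url}
      revision: ${revision}
EOF
}
```

For example: render_kpack_image latency-app https://github.com/your-org/latency-app xxx.dkr.ecr.us-west-2.amazonaws.com/paas-apps/latency-app | kubectl apply -f -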

Multi-Language Support: kpack automatically detects and builds applications in Node.js, Python, Java, Go, .NET, Ruby, PHP, and more—with zero configuration. No Dockerfile required. Just push your code, and kpack handles the rest using Cloud Native Buildpacks.

Platform Architecture

Here's how all the pieces fit together:

Complete Setup Walkthrough

This section covers the entire platform setup from infrastructure to application deployment. We'll approach this from two personas:

👷 Platform Engineer

What they build: The platform infrastructure itself

One-time setup:

- Deploy Kubernetes cluster (EKS, or self-hosted on AWS/GCP)

- Install kpack, cert-manager, external-dns, nginx-ingress

- Configure Application Profiles

- Set up IAM roles and permissions

Repository: paas-platform-example

Focus: Infrastructure, security, compliance, platform reliability

💻 Application Developer

What they deploy: Their applications on the platform

Daily workflow:

- Write application code

- Add a .astropulse/ folder to their repo

- Push code → GitHub Actions deploys automatically

- Monitor deployments

Repository: latency-app

Focus: Application features, deployment speed, developer experience

Platform Engineer: Setup Infrastructure

Choose Your Path

🚀 Script-Based Setup (Recommended)

Run one script that executes all steps automatically in ~30-40 minutes:

./setup-platform.sh --deploy

The script handles: cluster creation, CloudFormation stack, all platform services deployment.

Perfect for: Getting started quickly, production deployments

📚 Step-by-Step Walkthrough

Follow each step below to understand what the script does. Same result, but you learn how each component works.

The sections below explain:

- What each service does

- How to configure it

- How components work together

Perfect for: Learning the architecture, custom configurations

Both paths get you the same production-ready platform.

Step 1: Create Your Kubernetes Cluster

⏱️ Time: ~15-20 minutes | 📍 Progress: Step 1 of 3

First, provision a production-ready EKS cluster with dedicated node groups for different workload types. We've created a production-grade configuration in the paas-platform-example repository.

This example uses AWS EKS, but AstroPulse supports Kubernetes across multiple cloud providers. Self-hosted Kubernetes clusters on AWS and GCP are already supported, and managed GKE and AKS provisioning is coming soon.

📚 Documentation:

- Cluster Management Guide - All supported configurations

- EKS Networking Architecture - VPC, subnets, and networking details

- Data Plane Configuration - Node group specifications

💻 Using CLI (astroctl)

Our production cluster configuration uses 3 dedicated node groups for workload isolation:

📝 What You Need to Customize

- accountId - Replace "ACCOUNT_ID" with your AWS account ID

- region - Change us-west-2 to your preferred AWS region

- Node group sizes - Adjust minNode/maxNode based on your workload

Note: The setup-platform.sh script automatically replaces these values from your config.env.
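Here is a minimal sketch of what that substitution amounts to. It assumes config.env defines ACCOUNT_ID and AWS_REGION as shell variables, and that the template uses the literal placeholder ACCOUNT_ID and the default region us-west-2. render_with_config is a hypothetical helper, not the actual contents of setup-platform.sh.

```shell
# Hypothetical helper: fill the ACCOUNT_ID placeholder and the default region
# in a template, using values from a config.env file such as:
#   ACCOUNT_ID=123456789012
#   AWS_REGION=eu-central-1
render_with_config() {
  local template="$1" env_file="$2"
  # Source the variables in a subshell so they don't leak into the caller.
  (
    . "$env_file"
    sed -e "s/ACCOUNT_ID/${ACCOUNT_ID}/g" \
        -e "s/us-west-2/${AWS_REGION}/g" \
        "$template"
  )
}
```

Usage: render_with_config cluster/paas-cluster.yaml config.env > /tmp/paas-cluster.yaml, then apply the rendered file. A plain sed replacement also rewrites zone names such as us-west-2a, which is usually what you want when you change regions.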

View cluster/paas-cluster.yaml - Production EKS Cluster Configuration

Create the cluster:

$ astroctl clusters apply -f cluster/paas-cluster.yaml

Why 3 node groups? System nodes isolate infrastructure services so build and app workloads can't affect platform stability. Build nodes use general-purpose m5.xlarge instances (4 vCPU, 16 GiB) that auto-scale from 2 to 10 nodes for cost efficiency during builds. App nodes provide scalable application hosting with proper resource limits.

🤖 Using Nova AI

Example Nova prompts:

💬 "I need a production Kubernetes cluster on AWS for running a PaaS platform with kpack builds. What node groups and instance types should I use?"

💬 "Generate an EKS cluster configuration for us-west-2 with separate node groups for system services, builds, and applications"

💬 "What's the recommended cluster sizing for 50+ concurrent build jobs and 100 deployed applications?"

Nova AI can help with: Recommending optimal node instance types based on your workload, generating cluster YAML configurations with proper taints and labels, explaining networking and security best practices for multi-tenant platforms, and estimating monthly costs for different cluster configurations.

Example answer: For 50+ concurrent builds and 100 apps, Nova might suggest 3-5 m5.large nodes for system services, 5-15 m5.xlarge nodes for builds, and 10-30 m5.xlarge nodes for apps. Cost varies by cloud provider, region, and whether you use spot or reserved instances.
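You can check the arithmetic behind a build-node estimate like this yourself. The sketch below assumes each build requests 1 vCPU (1000m) and that an m5.xlarge (4 vCPU) leaves roughly 3500m allocatable after system reservations. Both figures are assumptions; replace them with your own measurements. nodes_needed is a hypothetical helper.

```shell
# Rough capacity estimate: nodes required so N builds can run at once,
# given the CPU request per build and the allocatable CPU per node.
# All CPU inputs are in millicores.
nodes_needed() {
  local builds="$1" cpu_per_build_m="$2" alloc_per_node_m="$3"
  local total=$(( builds * cpu_per_build_m ))
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (total + alloc_per_node_m - 1) / alloc_per_node_m ))
}
```

nodes_needed 50 1000 3500 prints 15. The calculation is 50 builds × 1000m = 50,000m, and 50,000 / 3,500 ≈ 14.3, which rounds up to 15. That lands at the top of the 5-15 build-node range above. Builds that request less CPU need proportionally fewer nodes.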


Create Application Profile:

After the cluster is provisioned, create an Application Profile to deploy applications to this cluster:

View app-profiles/paas-platform-profile.yaml - Application Profile

$ astroctl profiles apply -f app-profiles/paas-platform-profile.yaml

An Application Profile connects your applications to a specific cluster. All application YAML files reference this profile via profileName: paas-platform-profile. This allows you to deploy the same application configuration to different environments (dev, staging, prod) by simply changing the profile name.
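Switching environments is then a one-field change. As a minimal sketch, assuming each application YAML contains a single profileName: line, a helper like the hypothetical with_profile below prints a copy retargeted at another profile. The staging profile name in the usage note is also hypothetical.

```shell
# Hypothetical helper: print an application YAML with its profileName swapped,
# so one manifest can target dev, staging, or prod. Indentation is preserved.
with_profile() {
  local file="$1" profile="$2"
  sed -E "s/^([[:space:]]*profileName:[[:space:]]*).*/\1${profile}/" "$file"
}
```

Usage: with_profile .astropulse/deploy-app.yaml paas-staging-profile > /tmp/deploy-staging.yaml, then astroctl app apply -f /tmp/deploy-staging.yaml.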

Step 2: Deploy AWS Infrastructure

⏱️ Time: ~3-5 minutes | 📍 Progress: Step 2 of 3

Now that the cluster exists, deploy AWS infrastructure: ECR repositories, Route53, IAM roles with IRSA.

# Set your configuration variables
DOMAIN_NAME="your-domain.com"   # CHANGE THIS
CLUSTER_NAME="paas-platform-prod"
AWS_REGION="us-west-2"

# Get the OIDC provider ID from your cluster
OIDC_ID=$(aws eks describe-cluster --name "$CLUSTER_NAME" --region "$AWS_REGION" \
  --query "cluster.identity.oidc.issuer" --output text | cut -d/ -f5)

# Deploy the CloudFormation stack
aws cloudformation create-stack \
  --stack-name paas-platform-infrastructure \
  --template-body file://infrastructure/cloudformation/paas-infrastructure.yaml \
  --parameters \
    ParameterKey=DomainName,ParameterValue="$DOMAIN_NAME" \
    ParameterKey=EKSClusterName,ParameterValue="$CLUSTER_NAME" \
    ParameterKey=EKSClusterOIDCProvider,ParameterValue="$OIDC_ID" \
    ParameterKey=AWSRegion,ParameterValue="$AWS_REGION" \
  --capabilities CAPABILITY_NAMED_IAM \
  --region "$AWS_REGION"

# Wait until the stack finishes creating before reading its outputs
aws cloudformation wait stack-create-complete \
  --stack-name paas-platform-infrastructure --region "$AWS_REGION"

What this creates: ECR repositories, Route53 hosted zone, IAM roles for cert-manager/external-dns/kpack with IRSA

CloudFormation creates IAM roles with OIDC trust policies. The setup-platform.sh script automatically retrieves these role ARNs from CloudFormation outputs and configures the Kubernetes ServiceAccounts with eks.amazonaws.com/role-arn annotations. This gives pods AWS permissions without storing credentials.
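The cut -d/ -f5 in the stack command works because of the shape of the issuer URL. You can approximate the role-ARN wiring the script performs with standard AWS CLI and kubectl calls. In this sketch, the CloudFormation output key (for example, CertManagerRoleArn) and the ServiceAccount names are assumptions; check the Outputs section of paas-infrastructure.yaml for the real keys. annotate_from_stack needs AWS credentials and cluster access, so it is defined here but not run.

```shell
# The EKS issuer looks like:
#   https://oidc.eks.us-west-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE
# Splitting on "/" gives "https:", "", host, "id", "<ID>", so field 5 is the ID.
oidc_id_from_issuer() {
  echo "$1" | cut -d/ -f5
}

# Sketch of the IRSA wiring (output key and ServiceAccount names are hypothetical).
annotate_from_stack() {
  local stack="$1" output_key="$2" namespace="$3" sa="$4" region="$5"
  local role_arn
  role_arn=$(aws cloudformation describe-stacks \
    --stack-name "$stack" --region "$region" \
    --query "Stacks[0].Outputs[?OutputKey=='${output_key}'].OutputValue" \
    --output text)
  kubectl annotate serviceaccount "$sa" -n "$namespace" \
    "eks.amazonaws.com/role-arn=${role_arn}" --overwrite
}
```

Usage: annotate_from_stack paas-platform-infrastructure CertManagerRoleArn cert-manager cert-manager us-west-2. Pods pick up the IRSA annotation only when they are created, so restart the affected deployment (for example, with kubectl rollout restart) after you annotate an existing ServiceAccount.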

View infrastructure/cloudformation/paas-infrastructure.yaml

Step 3: Deploy Platform Services

⏱️ Time: ~10-15 minutes | 📍 Progress: Step 3 of 3

Now deploy the essential infrastructure services using AstroPulse's application interface - this makes everything managed and declarative.

Deploy cert-manager

cert-manager provides automatic TLS certificate management for your platform.

Before deploying: Update cert-manager.yaml:

- eks.amazonaws.com/role-arn - Update ACCOUNT_ID with your AWS account ID

View apps/core-services/cert-manager.yaml - cert-manager Deployment

$ astroctl app apply -f apps/core-services/cert-manager.yaml && astroctl app status -n cert-manager -w

💬 "Deploy cert-manager for automatic TLS certificate management using AstroPulse application interface"

Nova provides step-by-step guidance for cert-manager deployment and configuration.

Deploy TLS Certificates

📝 What You Need to Customize

- email - In letsencrypt-prod.yaml, replace admin@your-domain.com with your email (the ACME account contact address)

- region - Update the Route53 region if it differs from us-west-2

- dnsNames - In wildcard-cert.yaml, replace *.your-domain.com and your-domain.com with your actual domain

- repoURL - In letsencrypt-issuer.yaml, point to your forked repository

View resources/cert-manager/letsencrypt-prod.yaml - Production ClusterIssuer

View resources/cert-manager/wildcard-cert.yaml - Wildcard Certificate

View apps/core-services/letsencrypt-issuer.yaml - Deploy TLS Infrastructure

$ astroctl app apply -f apps/core-services/letsencrypt-issuer.yaml && astroctl app status -n letsencrypt-issuer -w

Start with letsencrypt-staging ClusterIssuer to test your setup and avoid hitting Let's Encrypt rate limits. Once verified, switch to letsencrypt-prod for production certificates.
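The staging and production issuers differ only in their ACME directory URL and issuer name. This sketch renders a Route53 DNS-01 ClusterIssuer for either one. The field layout follows cert-manager's cert-manager.io/v1 API, but the helper names are hypothetical, and the repo's letsencrypt-prod.yaml may carry extra settings such as a hosted zone ID. The sketch includes no explicit AWS credentials, on the assumption that cert-manager gets them from its IRSA-annotated ServiceAccount.

```shell
# Let's Encrypt ACME directory URLs for the two issuers.
acme_server() {
  case "$1" in
    staging) echo "https://acme-staging-v02.api.letsencrypt.org/directory" ;;
    prod)    echo "https://acme-v02.api.letsencrypt.org/directory" ;;
    *)       echo "unknown issuer: $1" >&2; return 1 ;;
  esac
}

# Render a DNS-01 (Route53) ClusterIssuer for the chosen environment.
render_cluster_issuer() {
  local env="$1" email="$2" region="$3" server
  server=$(acme_server "$env") || return 1
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-${env}
spec:
  acme:
    server: ${server}
    email: ${email}
    privateKeySecretRef:
      name: letsencrypt-${env}
    solvers:
    - dns01:
        route53:
          region: ${region}
EOF
}
```

Usage: render_cluster_issuer staging admin@your-domain.com us-west-2 | kubectl apply -f -. Verify that a test certificate is issued, then repeat with prod.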

Preview and app subdomains (e.g., latency-app-xyz.your-domain.com) require a wildcard certificate (*.your-domain.com) via cert-manager using a DNS-01 solver. This ensures HTTPS works automatically for all dynamic subdomains. First issuance may take a few minutes due to DNS propagation.
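A wildcard name covers exactly one extra DNS label and never the bare domain. That is why the certificate lists both *.your-domain.com and your-domain.com, and why a host like api.staging.your-domain.com would need its own name. The small shell check below encodes that rule. cert_covers is a hypothetical helper for illustration only.

```shell
# Does a certificate name (exact, or "*.domain" wildcard) cover a hostname?
cert_covers() {
  local pattern="$1" host="$2" base label
  case "$pattern" in
    '*.'*)
      base=${pattern#\*.}          # "*.your-domain.com" -> "your-domain.com"
      label=${host%."$base"}       # strip ".your-domain.com" from the host
      # The host must end in ".base", and what remains must be one non-empty label.
      [ "$label" != "$host" ] && [ -n "$label" ] && [ "${label#*.}" = "$label" ]
      ;;
    *)
      [ "$pattern" = "$host" ]
      ;;
  esac
}
```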

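TLS Termination Strategy: The wildcard certificate is stored in a Kubernetes secret (wildcard-tls), and nginx-ingress uses it for TLS termination at the ingress controller level. Individual application Ingress resources don't need to manage their own certificates. Because Ingress TLS secrets are namespace-scoped, the wildcard secret must either be configured as the controller's default certificate (ingress-nginx's --default-ssl-certificate flag) or be available in each application's namespace.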
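One way to let every Ingress share the wildcard certificate, without copying the secret into each namespace, is to make it nginx-ingress's default certificate through the controller's --default-ssl-certificate flag. Below is a minimal sketch of Helm values for the community ingress-nginx chart. It assumes the secret lives at ingress-nginx/wildcard-tls and uses the in-tree NLB annotation; the AWS Load Balancer Controller uses different annotations. render_ingress_values is hypothetical.

```shell
# Hypothetical Helm values: serve the wildcard cert as the controller default
# and expose the controller through an AWS NLB.
render_ingress_values() {
  local tls_namespace="${1:-ingress-nginx}" tls_secret="${2:-wildcard-tls}"
  cat <<EOF
controller:
  extraArgs:
    default-ssl-certificate: "${tls_namespace}/${tls_secret}"
  service:
    annotations:
      service.beta.kubernetes.io/aws-load-balancer-type: nlb
EOF
}
```

With this in place, an application Ingress can typically list its host under spec.tls without a secretName, and the controller should fall back to the default certificate.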

Deploy external-dns

external-dns provides automatic DNS record management, syncing Ingress resources to your DNS provider (Route53, Cloud DNS, Azure DNS).

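Why domain ownership matters: external-dns manages records in an existing DNS hosted zone (Route53 for AWS, Cloud DNS for GCP, Azure DNS for Azure); it does not create the zone. In this guide, the CloudFormation stack creates the Route53 hosted zone, and you delegate your domain to it by updating the nameservers at your domain registrar. You need: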

- A registered domain (from GoDaddy, Namecheap, Route53, etc.)

- Access to update nameservers at your registrar

- Domain not already delegated to another cloud provider account

Verification: Before deploying external-dns, confirm you can log into your domain registrar and update DNS nameservers. The CloudFormation stack created a hosted zone—you'll need to update your domain's nameservers to point to it.
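After you update the registrar, you can confirm delegation by comparing the hosted zone's nameservers with what public DNS returns. The commented commands show where the two lists typically come from; ZONE_ID is a placeholder. ns_match is a hypothetical helper that compares the lists while ignoring order, case, and trailing dots. Registrar changes can take hours to propagate, so a mismatch right after the update isn't necessarily an error.

```shell
# Compare two nameserver lists, ignoring order, case, and trailing dots.
# Typical inputs (not run here):
#   want: aws route53 get-hosted-zone --id "$ZONE_ID" \
#           --query 'DelegationSet.NameServers' --output text
#   have: dig +short NS your-domain.com
normalize_ns() {
  tr -s ' \t' '\n' | tr 'A-Z' 'a-z' | sed 's/\.$//' | grep -v '^$' | sort -u
}

ns_match() {
  [ "$(printf '%s\n' "$1" | normalize_ns)" = "$(printf '%s\n' "$2" | normalize_ns)" ]
}
```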

DNS Provider Examples:

- AWS: Route53 hosted zone → Update nameservers to AWS NS records

- GCP: Cloud DNS zone → Update nameservers to Google Cloud NS records

- Azure: Azure DNS zone → Update nameservers to Azure NS records

Don't have a domain? Register one at Namecheap, GoDaddy, or Route53.

📝 What You Need to Customize

- eks.amazonaws.com/role-arn - Update ACCOUNT_ID with your AWS account ID

- aws.region - Update to match your AWS region

- domainFilters - Replace your-domain.com with your actual domain

- txtOwnerId - Replace with your cluster name (e.g., paas-platform-prod)

View apps/core-services/external-dns.yaml - Automatic DNS Management

$ astroctl app apply -f apps/core-services/external-dns.yaml

💬 "Configure external-dns for AWS Route53 with automatic DNS record creation for my domain"

Nova helps configure DNS provider settings and IAM permissions correctly.

Deploy nginx-ingress

nginx-ingress provides HTTP/HTTPS routing and load balancing for all your applications.

If you prefer using AWS Certificate Manager instead of cert-manager with Let's Encrypt, you can configure nginx-ingress to use AWS ACM certificates via AWS Load Balancer annotations. This example uses cert-manager for a fully Kubernetes-native approach, but ACM is a valid alternative for AWS environments.

View apps/core-services/nginx-ingress.yaml - HTTP/HTTPS Routing

$ astroctl app apply -f apps/core-services/nginx-ingress.yaml

💬 "Deploy nginx-ingress controller with AWS NLB and configure it for high-availability production workloads"

Nova recommends optimal nginx-ingress settings for your traffic patterns.

Deploy kpack

kpack is the core component that provides source-to-image builds using Cloud Native Buildpacks.

View apps/core-services/kpack.yaml - Deploy kpack Build Controllers

$ astroctl app apply -f apps/core-services/kpack.yaml && astroctl app status -n kpack -w

💬 "Deploy kpack v0.13.0 with Cloud Native Buildpacks for automatic application builds from source code"

Nova can deploy and configure kpack with the right buildpacks for your tech stack via MCP.

Configure kpack build infrastructure

📝 What You Need to Customize

- eks.amazonaws.com/role-arn - In resources/kpack/service-account.yaml, update ACCOUNT_ID with your AWS account ID

- tag - Replace your-registry.com/kpack-builder with your container registry URL

- serviceAccountRef.name - Should match your kpack service account

View apps/core-services/kpack-config.yaml - Deploy kpack Configurations via GitOps

$ astroctl app apply -f apps/core-services/kpack-config.yaml

💬 "Configure kpack ClusterStack, ClusterStore, and ClusterBuilder for Node.js, Python, Go, Java, and other popular languages"

Nova generates complete kpack configurations with all necessary buildpacks.
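For reference, here is roughly what the three kpack build resources look like together. This is a sketch, not the repo's kpack-config. The Paketo Jammy stack and buildpack images, the resource names, and the service account are all assumptions, and Paketo image names change over time. The builder tag also assumes an ECR repository named kpack-builder exists in your account.

```shell
# ECR registry host for an account and region.
ecr_registry() {
  echo "$1.dkr.ecr.$2.amazonaws.com"
}

# Hypothetical kpack build infrastructure (kpack.io/v1alpha2).
render_kpack_builder() {
  local registry="$1"
  cat <<EOF
apiVersion: kpack.io/v1alpha2
kind: ClusterStack
metadata:
  name: base
spec:
  id: io.buildpacks.stacks.jammy
  buildImage:
    image: paketobuildpacks/build-jammy-base
  runImage:
    image: paketobuildpacks/run-jammy-base
---
apiVersion: kpack.io/v1alpha2
kind: ClusterStore
metadata:
  name: default
spec:
  sources:
  - image: paketobuildpacks/nodejs
  - image: paketobuildpacks/go
  - image: paketobuildpacks/python
---
apiVersion: kpack.io/v1alpha2
kind: ClusterBuilder
metadata:
  name: default-builder
spec:
  tag: ${registry}/kpack-builder
  stack:
    kind: ClusterStack
    name: base
  store:
    kind: ClusterStore
    name: default
  serviceAccountRef:
    name: kpack-service-account
    namespace: kpack
  order:
  - group:
    - id: paketo-buildpacks/nodejs
  - group:
    - id: paketo-buildpacks/go
  - group:
    - id: paketo-buildpacks/python
EOF
}
```

Usage: render_kpack_builder "$(ecr_registry 123456789012 us-west-2)" | kubectl apply -f -. The ClusterBuilder tries each order group in turn, and the first group whose buildpacks detect the app wins.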

✅ Why Deploy via AstroPulse Applications?

- Declarative: All infrastructure is YAML-defined and version-controlled

- Unified Management: Use astroctl app commands to manage everything consistently

- Lifecycle Management: Easy updates, rollbacks, and monitoring

- Multi-Cluster: Same configs work across dev, staging, production

- GitOps Ready: Integrate with your CI/CD pipelines

Instead of manually running helm/kubectl commands, everything is managed as an AstroPulse Application with full lifecycle support.

Application Developer: Daily Workflow

⏱️ Time: ~15 minutes first deploy | ~3-5 minutes subsequent deploys

Now that the platform is ready, let's see how application developers use it to deploy their apps.

The .astropulse/ Standard

Every application follows the same folder structure:

$ tree -L 3 -a my-app/
my-app/
├── src/                      # Your application code
├── .astropulse/              # Deployment configuration
│   ├── profile.yaml          # Application profile (connects to cluster)
│   ├── build-app.yaml        # AstroPulse Application (build)
│   ├── deploy-app.yaml       # AstroPulse Application (deploy)
│   └── resources/
│       └── kpack-image.yaml  # kpack build config
└── .github/                  # GitHub Actions (optional)
    └── workflows/
        └── deploy.yml        # Automated deployment workflow

Why this pattern?

- ✅ Consistent - Every app uses the same structure

- ✅ Self-contained - All deployment config lives with your code

- ✅ GitOps native - Config is version controlled

- ✅ Self-service - Developers deploy independently
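To start a new repository in this shape, a few mkdir and touch calls are enough. scaffold_astropulse is a hypothetical helper that creates the empty layout from the tree above. The file contents still come from the examples that follow, or from Nova.

```shell
# Hypothetical scaffolder: create the empty .astropulse/ layout inside an app directory.
scaffold_astropulse() {
  local app_dir="$1"
  mkdir -p "$app_dir/.astropulse/resources" "$app_dir/.github/workflows"
  touch "$app_dir/.astropulse/profile.yaml" \
        "$app_dir/.astropulse/build-app.yaml" \
        "$app_dir/.astropulse/deploy-app.yaml" \
        "$app_dir/.astropulse/resources/kpack-image.yaml" \
        "$app_dir/.github/workflows/deploy.yml"
}
```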

Complete Example: latency-app

Let's walk through a complete example using our latency-app repository.

The complete flow:

- Create application profile → connects app to cluster

- Deploy kpack Image resource → kpack builds container

- Deploy application → AstroPulse creates all resources

- Your app is live with HTTPS!

File 1: Application Profile

View .astropulse/profile.yaml - Application Profile

The application profile connects your app to a specific cluster. Update clusterName to match your cluster name from platform setup. This profile is separate from the platform services profile (paas-platform-profile), giving you isolation and flexibility.

File 2: Build Configuration

View .astropulse/build-app.yaml - Build Configuration

File 3: kpack Image Resource

View .astropulse/resources/kpack-image.yaml - kpack Build Config

File 4: Deployment Configuration

View .astropulse/deploy-app.yaml - Deployment (type: image)

File 5: GitHub Actions Automation

View .github/workflows/deploy.yml - Automated Deployment

💬 "Generate a complete .astropulse/ folder structure for my Node.js application with automatic builds and deployment"

Nova creates all four configuration files customized for your application.

📝 What You Need to Customize

- Cluster Name - In .astropulse/profile.yaml, match your cluster from platform setup

- Profile Name - In .astropulse/build-app.yaml and .astropulse/deploy-app.yaml

- AWS Account ID & Region - In .astropulse/deploy-app.yaml and .astropulse/resources/kpack-image.yaml

- Domain Name - In .astropulse/deploy-app.yaml, replace your-domain.com

- Git Repository URL - In .astropulse/build-app.yaml and .astropulse/resources/kpack-image.yaml

Easy way: Use the automated deploy-app.sh script which updates all these values automatically!

See the latency-app README for detailed instructions.

💬 "Deploy my latency-app application using the .astropulse/ configuration and verify it's running with TLS"

Nova handles the entire deployment flow and verifies all components are working.

Before you start: Setup your application

⚠️ One-Time Setup Required

First time setup:

- Fork the latency-app repository to your GitHub account

- Clone your fork locally

- Read the README.md - It contains detailed instructions for customizing all values

- Update configuration in the .astropulse/ files:
  - Cluster name in profile.yaml
  - AWS Account ID & Region
  - Your domain name
  - Your Git repository URL (your fork, not the original)

- Commit and push your changes to your fork

- Deploy once manually to verify everything works:

astroctl app profile apply -f .astropulse/profile.yaml

astroctl app apply -f .astropulse/build-app.yaml

astroctl app apply -f .astropulse/deploy-app.yaml

After this initial setup, the Git workflow becomes fully automated!

Easier option: Use the deploy-app.sh script from paas-platform-example which handles all configuration updates automatically.

Developer workflow (after setup):

The Vercel-like experience - just push code:

# Make changes to your application
vim main.go

# Commit and push
git add .
git commit -m 'Add new feature'
git push origin main

# That's it! From here, automatically:
# 1. kpack detects the new Git commit
# 2. kpack builds a new container image
# 3. kpack pushes it to ECR with the :latest tag
# 4. GitHub Actions (if enabled) deploys the new image

What happens automatically:

- kpack watches your Git repo - Detects new commits on the main branch

- Builds container image - Uses Cloud Native Buildpacks (3-5 min)

- Pushes to registry - Updates your-registry.com/paas-apps/latency-app:latest

- GitHub Actions deploys - (If enabled) Waits for the build, then deploys the new image

The GitHub Actions workflow builds and deploys with both :latest and Git SHA tags for production-grade deployments:

On every push, the workflow:

- Builds the image with dual tags - kpack pushes to both :latest and :<git-sha> (e.g., :a1b2c3d)

# kpack builds and pushes both tags automatically
tag: xxx.dkr.ecr.us-west-2.amazonaws.com/paas-apps/latency-app:latest
additionalTags:
- xxx.dkr.ecr.us-west-2.amazonaws.com/paas-apps/latency-app:a1b2c3d

- Deploys using the Git SHA tag - Updates deploy-app.yaml to use the versioned tag

# Deployment uses the specific Git SHA, not :latest
image:
  tag: a1b2c3d  # Production-grade: reproducible, auditable, rollback-friendly

Why both tags?

- :latest - For manual testing and development workflows

- :<git-sha> - For production deployments with reproducibility and easy rollbacks
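A CI step can derive both references from the commit. Below is a minimal sketch, assuming the 7-character SHA convention used in the examples; image_refs is a hypothetical helper. Truncating the full SHA from git rev-parse HEAD keeps tags a fixed length, whereas git rev-parse --short may return longer prefixes in large repositories.

```shell
# Print the moving :latest reference and the immutable short-SHA reference.
image_refs() {
  local repo="$1" sha="$2"
  printf '%s:latest\n' "$repo"
  printf '%s:%.7s\n' "$repo" "$sha"   # %.7s keeps the first 7 characters
}
```

Usage: image_refs xxx.dkr.ecr.us-west-2.amazonaws.com/paas-apps/latency-app "$(git rev-parse HEAD)". To roll back, you deploy an earlier SHA tag, which :latest can't express.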

Manual deployment: Use :latest tag (already configured in the repo files)

astroctl app apply -f .astropulse/deploy-app.yaml # Uses :latest

Automated deployment: GitHub Actions automatically uses Git SHA tags (production-grade)

See the full workflow implementation: .github/workflows/deploy.yml

The latency-app includes a GitHub Actions workflow (.github/workflows/deploy.yml):

Setup (one-time):

- Add ASTROPULSE_API_KEY to GitHub Secrets

- Update the trigger: change workflow_dispatch to push: branches: [main]

Then every push to main automatically:

- ✅ Waits for kpack to finish building

- ✅ Deploys new image to your cluster

- ✅ Verifies deployment is running

- ✅ Zero-downtime rolling update
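At its core, the "waits for kpack to finish building" step is a bounded retry loop. This sketch shows one in plain shell. poll_until is hypothetical. The readiness check you pass it is left as an assumption, because it depends on how your workflow names resources; one option is a query against kpack's Build resources for the pushed commit.

```shell
# Retry a command until it succeeds or the attempts run out.
# Usage: poll_until TRIES INTERVAL_SECONDS CMD [ARGS...]
poll_until() {
  local tries="$1" interval="$2" attempt=1
  shift 2
  while [ "$attempt" -le "$tries" ]; do
    if "$@"; then
      return 0
    fi
    # Skip the sleep after the final failed attempt.
    if [ "$attempt" -lt "$tries" ]; then
      sleep "$interval"
    fi
    attempt=$((attempt + 1))
  done
  return 1
}
```

For example, poll_until 60 10 your_build_check waits up to about ten minutes (60 attempts, 10 seconds apart) before failing the job. your_build_check is a hypothetical command that exits 0 once the new image exists.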

The workflow is disabled by default - you can deploy manually with astroctl app apply or enable it for full GitOps automation.

For private registries, use IRSA (AWS) / Workload Identity (GCP) / Managed Identity (Azure) for image pulls, falling back to imagePullSecrets where needed. Manage application secrets via External Secrets Operator to avoid storing credentials in manifests.

Security & Compliance

AstroPulse gives you the foundation: The base platform (cluster, DNS, TLS, application deployment, monitoring).

Production-grade platforms need security: Vulnerability scanning, policy enforcement, and compliance controls are critical for production workloads.

Deploy Trivy Operator

View apps/core-services/trivy-operator.yaml - Security Vulnerability Scanning

$ astroctl app apply -f apps/core-services/trivy-operator.yaml && astroctl app status -n trivy-system -w

💬 "Deploy Trivy Operator for continuous vulnerability scanning of all container images and Kubernetes configurations"

Nova can help configure Trivy scanning policies and integrate with your CI/CD pipeline.

Deploy Kyverno

View apps/core-services/kyverno.yaml - Policy Enforcement Engine

$ astroctl app apply -f apps/core-services/kyverno.yaml && astroctl app status -n kyverno -w

💬 "Deploy Kyverno for policy enforcement and admission control in Kubernetes"

Nova can help you write custom policies for your compliance requirements.

Deploy Security Policies

View resources/kyverno/image-scanning-policy.yaml - Security Policies

$ astroctl app apply -f apps/core-services/kyverno-policies.yaml

💬 "Configure Kyverno policies to block container images with CRITICAL vulnerabilities and enforce security best practices"

Nova helps you customize policies for HIPAA, SOC2, PCI-DSS, or other compliance frameworks.

✅ What You Just Deployed

- ✅ Trivy Operator - Automatically scans all container images for CVE vulnerabilities

- ✅ Kyverno - Policy enforcement engine with admission control

- ✅ Security Policies:

  - Block images with CRITICAL vulnerabilities

  - Require images from trusted registries (your ECR)

  - Enforce resource limits on all containers

  - Disallow privileged containers
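To make three of these policies concrete, here is a sketch of a Kyverno ClusterPolicy covering privileged containers, resource limits, and the trusted-registry rule. It is not the example repo's policy file: the policy name and excluded namespaces are assumptions (extend the list with any platform namespace that runs upstream images, such as cert-manager or ingress-nginx), and the CVE-blocking rule is omitted because it depends on how scan results are wired into admission. Newer Kyverno releases move validationFailureAction to a per-rule failureAction field.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: platform-baseline              # hypothetical
spec:
  validationFailureAction: Enforce     # reject non-compliant Pods at admission
  background: true                     # also report on existing Pods
  rules:
    - name: disallow-privileged
      match:
        any:
          - resources:
              kinds: [Pod]
      exclude:
        any:
          - resources:
              namespaces: [kube-system, kyverno, trivy-system]
      validate:
        message: "Privileged containers are not allowed."
        pattern:
          spec:
            containers:
              - =(securityContext):          # if set...
                  =(privileged): "false"     # ...privileged must be false
    - name: require-limits
      match:
        any:
          - resources:
              kinds: [Pod]
      exclude:
        any:
          - resources:
              namespaces: [kube-system, kyverno, trivy-system]
      validate:
        message: "CPU and memory limits are required on every container."
        pattern:
          spec:
            containers:
              - resources:
                  limits:
                    cpu: "?*"                # any non-empty value
                    memory: "?*"
    - name: trusted-registry
      match:
        any:
          - resources:
              kinds: [Pod]
      exclude:
        any:
          - resources:
              namespaces: [kube-system, kyverno, trivy-system]
      validate:
        message: "Images must come from the platform's ECR registry."
        pattern:
          spec:
            containers:
              - image: "xxx.dkr.ecr.us-west-2.amazonaws.com/*"
```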

View vulnerability reports:

kubectl get vulnerabilityreports -A

kubectl get configauditreports -A

Your platform is now production-ready with:

- ✅ Continuous vulnerability scanning

- ✅ Policy enforcement at admission time

- ✅ Compliance-ready audit trails

- ✅ Security best practices enforced automatically

Observability Stack

Deploy a complete observability stack with Prometheus (metrics), Grafana (visualization), and ClickHouse (analytics database for logs/events).

- Platform-specific configs: paas-platform-example/apps/core-services/ (cert-manager, kpack, nginx, etc.)

- Reusable observability apps: root-level apps/ folder (prometheus, grafana, clickhouse)

What You'll Monitor:

- Platform: cert-manager certificates, nginx-ingress requests, external-dns sync, kpack builds

- Applications: CPU/memory usage, HTTP latency, error rates, pod health

- Kubernetes: Node utilization, pod scheduling, network traffic, storage I/O

Deploy Observability Stack

⚠️ Different Folder - Requires Customization

Important: Unlike the core services (cert-manager, kpack, etc.), these observability apps are from the root-level apps/ folder, NOT from paas-platform-example/.

You need to update each YAML file:

- profileName - Update to paas-platform-profile to deploy to your cluster


Expose Grafana with Ingress (Optional):

To access Grafana via public URL, deploy the Ingress-enabled version:

📝 What You Need to Customize

- hosts - Replace grafana.your-domain.com with your actual domain

- adminPassword - IMPORTANT: Change the default password to a secure value before deploying!

View apps/core-services/grafana-ingress.yaml - Grafana with Ingress

$ astroctl app apply -f apps/core-services/grafana-ingress.yaml

Access Grafana:

- URL: https://grafana.your-domain.com (external-dns creates the DNS record automatically)

- Credentials: user admin with the adminPassword you configured (never leave it at Grafana's stock admin/admin default)
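Under the hood, public access relies on a standard Kubernetes Ingress: nginx-ingress routes the traffic, external-dns creates the DNS record from the host rule, and cert-manager issues the certificate named in the tls block. The sketch below shows what such an Ingress typically looks like; the namespace, issuer name, and Service name/port are assumptions, not values read from grafana-ingress.yaml.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: monitoring                              # hypothetical
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod # hypothetical issuer name
spec:
  ingressClassName: nginx
  tls:
    - hosts: [grafana.your-domain.com]
      secretName: grafana-tls          # cert-manager stores the certificate here
  rules:
    - host: grafana.your-domain.com    # external-dns publishes this hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana          # hypothetical Service name
                port:
                  number: 80
```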

Import pre-built dashboards:

- Kubernetes cluster monitoring (ID: 7249)

- NGINX Ingress controller (ID: 9614)

Grafana is configured with persistent storage (100Gi) using your cluster's default storage class:

- EKS (AWS): Uses gp2 or gp3 by default

- GKE (Google Cloud): Uses pd-standard by default

- AKS (Azure): Uses the default storage class

The PersistentVolumeClaim will be automatically created when Grafana is deployed.
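The "default storage class" behavior comes from leaving storageClassName unset on the claim. A sketch of an equivalent PVC, assuming a monitoring namespace (hypothetical):

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana
  namespace: monitoring          # hypothetical
spec:
  accessModes: [ReadWriteOnce]   # single-node block storage (EBS, PD, Azure Disk)
  # storageClassName omitted on purpose: the cluster's default class is used
  resources:
    requests:
      storage: 100Gi
```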

💬 "Deploy and configure Prometheus, Grafana, and ClickHouse for my platform observability"

Nova can deploy these tools directly via MCP or guide you through manual deployment with production best practices.

What you get:

- ✅ Continuous vulnerability scanning - Every image scanned automatically

- ✅ Policy enforcement - Security policies enforced at admission time

- ✅ Complete observability - Metrics, dashboards, and alerting

- ✅ Compliance ready - Audit trails and policy reports

- ✅ Production hardened - Enterprise-grade security and monitoring

Production Considerations

Before going live with your PaaS platform, here are the critical production hardening steps:

1. Container Registry Security

All IAM permissions are configured via CloudFormation:

✅ Everything via Infrastructure as Code

For AWS (CloudFormation): The complete example includes a CloudFormation template that creates ALL AWS resources:

- IAM roles for kpack, external-dns, cert-manager (with IRSA trust policies)

- ECR repositories for apps and builder images

- Route53 hosted zone for your domain

- All IAM policies with proper least-privilege permissions

View infrastructure/cloudformation/paas-infrastructure.yaml - Complete AWS Infrastructure
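As an illustration of what an IRSA role in such a template looks like, here is a hypothetical CloudFormation excerpt for kpack's push role. The account ID, OIDC provider ID, role name, and ServiceAccount are placeholders (the real template likely derives them with !Sub or parameters). The trust policy restricts the role to a single Kubernetes ServiceAccount, and the permissions allow pushing only to the paas-apps repositories.

```yaml
# Hypothetical excerpt; resource names and IDs are placeholders.
Resources:
  KpackBuildRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: paas-kpack-build
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Federated: "arn:aws:iam::111122223333:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
            Action: "sts:AssumeRoleWithWebIdentity"
            Condition:
              StringEquals:
                # Only this Kubernetes ServiceAccount may assume the role
                "oidc.eks.us-west-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE:sub": "system:serviceaccount:default:kpack-builder"
                "oidc.eks.us-west-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE:aud": "sts.amazonaws.com"
      Policies:
        - PolicyName: ecr-push-paas-apps
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow          # the token call cannot be scoped to a repository
                Action: "ecr:GetAuthorizationToken"
                Resource: "*"
              - Effect: Allow          # push/pull limited to the app repositories
                Action:
                  - "ecr:BatchCheckLayerAvailability"
                  - "ecr:BatchGetImage"
                  - "ecr:GetDownloadUrlForLayer"
                  - "ecr:InitiateLayerUpload"
                  - "ecr:UploadLayerPart"
                  - "ecr:CompleteLayerUpload"
                  - "ecr:PutImage"
                Resource: "arn:aws:ecr:us-west-2:111122223333:repository/paas-apps/*"
```

The matching ServiceAccount then carries the annotation eks.amazonaws.com/role-arn pointing at this role's ARN.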

💬 "Review my CloudFormation template for security best practices and recommend IAM policy improvements"

Nova analyzes your infrastructure as code for security vulnerabilities.

2. Security Stack: Vulnerability Scanning + Policy Enforcement

✅ Security Best Practices

- Trivy Operator - Continuous vulnerability scanning of all container images

- Kyverno policies - Block deployments with CRITICAL vulnerabilities

- Policy enforcement - Require security labels, resource limits, and non-root containers

- Compliance reporting - Automated audit trails for SOC2/PCI-DSS/HIPAA

See the Security & Compliance section for deployment instructions.

💬 "Review my security policies and recommend improvements for compliance requirements"

Nova helps configure security scanning and policy enforcement for your compliance framework.

3. Build Performance & Cost Optimization

✅ Performance & Cost Features

- Build caching - Persistent kpack build cache volumes for roughly 5-10x faster rebuilds

- Resource limits - LimitRange policies prevent runaway build costs

- Spot instances - About 70% of build-node capacity runs on spot instances, which typically cost 60-70% less than on-demand

- Auto-scaling - Node groups scale 1-10 based on actual build demand
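A LimitRange caps what any single build container can request, so a misbehaving build cannot claim a whole node. A sketch, assuming builds run in a dedicated namespace (hypothetical name) and using illustrative sizes you would tune to your builders:

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: build-limits
  namespace: kpack-builds        # hypothetical namespace for build pods
spec:
  limits:
    - type: Container
      defaultRequest:            # requests applied when a container sets none
        cpu: 500m
        memory: 1Gi
      default:                   # limits applied when a container sets none
        cpu: "2"
        memory: 4Gi
      max:                       # hard ceiling per container
        cpu: "4"
        memory: 8Gi
```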

Impact: First build takes 5 minutes, subsequent builds complete in 30-60 seconds. Optimized caching and spot instances significantly reduce costs.
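How much spot capacity saves overall depends on two numbers: the share of build capacity on spot, s, and the spot discount, d. A rough worked estimate, assuming s = 0.7 and the 60-70% spot discount cited in the cost FAQ (illustrative values, not measurements):

$$
\text{build-compute savings} \approx s \times d, \qquad 0.7 \times 0.6 = 0.42, \qquad 0.7 \times 0.7 = 0.49
$$

Because d can never exceed 1, blended savings are always bounded by the spot share s; pushing savings higher means moving more build capacity onto spot.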

💬 "Analyze my kpack build performance and recommend optimizations for faster builds and lower costs"

Nova identifies build bottlenecks and suggests caching strategies.

4. High Availability & Disaster Recovery

✅ HA Features Built-In

- Multi-AZ node groups - All node groups span 3 availability zones

- Minimum 2-3 replicas - System services run with HA configurations

- Pod anti-affinity - Critical services spread across zones

- GitOps for DR - All configurations in Git for fast recovery
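For your own workloads, the same pattern can be expressed with zone-level pod anti-affinity plus a PodDisruptionBudget. A sketch using the sample latency-app (replica count, resource values, and PDB threshold are illustrative assumptions):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: latency-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: latency-app
  template:
    metadata:
      labels:
        app: latency-app
    spec:
      affinity:
        podAntiAffinity:
          # Prefer one replica per availability zone; still schedules if a zone is full
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                topologyKey: topology.kubernetes.io/zone
                labelSelector:
                  matchLabels:
                    app: latency-app
      containers:
        - name: latency-app
          image: xxx.dkr.ecr.us-west-2.amazonaws.com/paas-apps/latency-app:a1b2c3d
          resources:
            requests: {cpu: 100m, memory: 128Mi}
            limits: {cpu: 500m, memory: 256Mi}
---
# Keep at least two replicas up during node drains and upgrades
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: latency-app
spec:
  minAvailable: 2
  selector:
    matchLabels:
      app: latency-app
```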

💬 "Review my cluster configuration for high availability and disaster recovery best practices"

Nova validates your HA setup and suggests improvements for resilience.

5. Complete Cost Breakdown

Infrastructure costs scale with usage (workload, not team size). Use spot instances and auto-scaling to optimize.

Traditional self-hosted platforms fail due to operational overhead: 2-5 platform engineers ($300K-1.25M/year), on-call rotations, maintenance toil, and burnout.
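The engineering-cost range above implies a fully loaded cost per platform engineer of roughly:

$$
\frac{\$300\text{K}}{2\ \text{engineers}} = \$150\text{K/year}, \qquad \frac{\$1.25\text{M}}{5\ \text{engineers}} = \$250\text{K/year}
$$

This is before on-call compensation or tooling, which is why team size, not infrastructure, usually dominates the cost of a self-hosted platform.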

With AstroPulse + Nova AI: Infrastructure costs + subscription. Nova takes on the work a dedicated platform team would otherwise do (debugging, maintenance, troubleshooting, and optimization), diagnosing issues and recommending fixes that you approve.

Operations & Debugging

Traditional platforms require dedicated teams. This platform is different - Nova AI acts as your platform engineer, SRE, and on-call engineer.

How Nova Helps with Operations

- Debugging - "My build is failing" → Nova analyzes logs, identifies root cause, suggests fixes

- Troubleshooting - Certificate renewals, networking issues, performance problems → Nova diagnoses and resolves

- Optimization - Cost analysis, resource tuning, performance improvements → Nova recommends changes

- 24/7 Support - No on-call rotation needed, Nova is always available

You work via Nova AI, CLI, or UI - Choose what works best for each task.

Common Troubleshooting Scenarios

Build Failures

Check build status:

$ astroctl app get nodejs-build
$ astroctl app logs nodejs-build

💬 "My kpack build is failing with 'buildpack detect failed' error. Diagnose the issue and provide the fix."

Nova analyzes build logs and provides root cause analysis with fixes.

Certificate Provisioning Issues

Check certificate status:

$ astroctl app get letsencrypt-issuer
$ astroctl app logs cert-manager

💬 "My Let's Encrypt certificate is stuck in Pending state. Troubleshoot DNS-01 challenge and fix the issue."

Nova debugs cert-manager and DNS configuration problems.
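When a certificate is stuck in Pending, it helps to compare your issuer against a known-good DNS-01 shape. A sketch of a Let's Encrypt ClusterIssuer using the Route53 solver, assuming cert-manager picks up AWS credentials from its IRSA role (ambient credentials are allowed for ClusterIssuers by default); the issuer name, email, and zone are placeholders. While testing, point server at Let's Encrypt's staging directory (https://acme-staging-v02.api.letsencrypt.org/directory) to avoid production rate limits.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod                 # hypothetical
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: platform-team@your-domain.com # placeholder
    privateKeySecretRef:
      name: letsencrypt-prod-account-key # ACME account key stored here
    solvers:
      - selector:
          dnsZones:
            - your-domain.com            # use this solver only for this zone
        dns01:
          route53:
            region: us-west-2            # credentials come from the IRSA role
```

Common culprits are a role missing Route53 record-change permissions or a zone whose nameservers are not delegated from the registrar; kubectl get challenges -A shows the per-domain challenge state.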

DNS Not Working

Verify DNS configuration:

$ astroctl app get external-dns
$ astroctl app logs external-dns

💬 "My application is deployed but DNS is not resolving. Troubleshoot external-dns and Route53 configuration."

Nova checks DNS records, nameserver delegation, and provider settings.
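Many external-dns problems come down to its container arguments. The fragment below shows the flags that typically matter for Route53; the image tag and owner ID are assumptions, and the exact layout depends on whether you deploy external-dns via a Helm chart or raw manifests.

```yaml
# Fragment of the external-dns Deployment pod spec
containers:
  - name: external-dns
    image: registry.k8s.io/external-dns/external-dns:v0.14.0  # assumed version
    args:
      - --source=ingress               # watch Ingress hosts
      - --provider=aws                 # Route53
      - --domain-filter=your-domain.com  # must match your hosted zone
      - --aws-zone-type=public
      - --policy=upsert-only           # create/update records, never delete
      - --registry=txt                 # TXT ownership records
      - --txt-owner-id=paas-platform   # hypothetical; unique per cluster
```

A domain-filter that doesn't match the hosted zone, or two clusters sharing one txt-owner-id, are frequent reasons records silently never appear.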

Application Deployment Errors

Check application status:

$ astroctl app get nodejs-app
$ astroctl app logs nodejs-app

💬 "My application deployment shows ImagePullBackOff error. Diagnose registry authentication and provide step-by-step fix."

Nova troubleshoots deployment issues and registry permissions.

Frequently Asked Questions

🤔 Why build your own when Vercel/Netlify exist?

Vercel brought incredible developer experience to the market. This platform brings that same simplicity to environments where managed services can't be used:

When you NEED your own platform:

- Air-gapped / On-premises: Government, defense, financial institutions with network isolation requirements

- Data Sovereignty: Healthcare (HIPAA), finance (SOX/PCI-DSS), EU data residency (GDPR)

- Enterprise Compliance: FedRAMP, SOC2, industry-specific regulations

- SaaS Product: Build and sell deployment services under your own brand (you can't resell Vercel)

- Internal Developer Platform: Give your teams Vercel-like experience on your infrastructure, your policies, your cloud accounts

- Multi-cloud / Hybrid: Need to run on AWS, GCP, Azure, and on-premises from one platform

The Problem: These environments traditionally meant hiring platform engineering teams, on-call rotations, and endless operational toil.

The Solution: AstroPulse + Nova AI gives you Vercel-like simplicity in your own environment, without the operational burden.

💰 What's the total cost of running this platform?

Cost depends on your scale and cloud provider:

- Small teams (10 devs): Modest infrastructure (few nodes, minimal auto-scaling)

- Medium teams (25-50 devs): Moderate infrastructure with auto-scaling

- Large teams (100+ devs): Production-grade with HA across multiple availability zones

What affects cost:

- Number of nodes (system, build workers, app runtime)

- Instance types (use spot instances for builds to save 60-70%)

- Auto-scaling configuration (aggressive vs conservative)

- High availability requirements (multi-AZ deployments)

- Cloud provider and region

Cost includes: Kubernetes control plane, compute instances, load balancers, DNS, data transfer

The real cost difference:

- Infrastructure cost: Predictable monthly cloud spend (scales with workload, not team size)

- Traditional operational cost: 2-5 platform engineers ($300K-1.25M/year) + on-call + toil

- With Nova AI: Infrastructure + AstroPulse subscription (no platform engineering team needed)

Bottom line: Without Nova AI, you'd need a dedicated platform team, making this approach expensive. With Nova handling operations, you get the platform without the operational burden.

🛡️ Is this production-ready? What about security and compliance?

Yes, production-ready. Deploy security and compliance in minutes:

Security Stack:

- Trivy Operator - CVE scanning, configuration audits, SBOM generation

- Kyverno - Policy enforcement (blocks privileged containers, requires resource limits, blocks images with CRITICAL CVEs)

- Observability - Prometheus, Grafana, ClickHouse for metrics and monitoring

Production Features: Multi-AZ HA, automated TLS, IRSA/IAM best practices, network security, encryption at rest, audit logging, zero-trust networking.

Compliance Templates: Pre-configured Kyverno policies for HIPAA, SOC2, PCI-DSS, FedRAMP. Apply YAML files to enforce compliance requirements automatically.

🔧 What languages and frameworks are supported?

Automatically detected and built:

- Node.js - Express, Next.js, NestJS, Fastify (14, 16, 18, 20, 22)

- Python - Django, Flask, FastAPI, Pyramid (3.8-3.12)

- Java - Spring Boot, Quarkus, Micronaut (8, 11, 17, 21)

- Go - Gin, Echo, Fiber, standard lib (1.18-1.22)

- And more - .NET, Ruby, PHP via Cloud Native Buildpacks

No Dockerfile required - Cloud Native Buildpacks detect and build automatically.
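When you need to pin a runtime version rather than rely on detection, buildpack environment variables can be set on the kpack Image. A fragment, assuming a Paketo-based ClusterBuilder (the Paketo Node.js buildpack also honors package.json engines, .nvmrc, and .node-version):

```yaml
# Fragment of a kpack Image spec (assumes Paketo buildpacks)
spec:
  build:
    env:
      - name: BP_NODE_VERSION   # Paketo Node.js: pin the runtime line
        value: "20.*"
      # Other Paketo language buildpacks read similar variables, e.g.
      # BP_CPYTHON_VERSION (Python), BP_JVM_VERSION (Java), BP_GO_VERSION (Go)
```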

Custom builds: Use GitHub Actions, Jenkins, or any CI/CD tool you prefer.

⏱️ How long does it really take to set this up?

Platform infrastructure: 30 minutes

- Cluster creation: 8-10 minutes

- cert-manager, external-dns, nginx-ingress, kpack: 15-20 minutes

First application deployment: 15 minutes

- kpack build: 3-5 minutes

- Deployment + DNS + TLS: 5-10 minutes

Total: ~45 minutes from empty AWS account to first app live with HTTPS

Subsequent deployments: 3-5 minutes per app (with cached builds: 30-60 seconds)

📚 Do I need Kubernetes expertise?

No deep Kubernetes expertise required. AstroPulse abstracts most complexity:

What you DON'T need to know:

- Kubernetes API internals

- Custom Resource Definitions (CRDs)

- Advanced networking (CNI, service mesh)

- Helm chart development

What helps (but Nova AI can guide you):

- Basic YAML syntax

- Understanding of containers/images

- Git workflows

- DNS concepts (nameservers, A records)

Nova AI helps with: Cluster sizing, troubleshooting, optimizations, security best practices

🌐 Can I use this on GCP, Azure, or self-hosted Kubernetes instead of AWS?

Yes! The architecture is cloud-agnostic.

Current Support Status:

- AWS (EKS): Managed cluster provisioning available now ✅

- AWS (Self-Hosted): Self-hosted Kubernetes clusters on AWS already supported ✅

- GCP (Self-Hosted): Self-hosted Kubernetes clusters on GCP already supported ✅

- GCP (GKE): Managed cluster provisioning coming soon 🚧

- Azure (AKS): Managed cluster provisioning coming soon 🚧

- Azure (Self-Hosted): Self-hosted Kubernetes clusters on Azure coming soon 🚧

Connect any existing Kubernetes cluster (kubeadm, k3s, RKE, Rancher, OpenShift, etc.) on supported cloud providers. See the Cluster Management Guide for complete details.

Why is this cloud-agnostic?

Because everything runs on Kubernetes, which provides a unified API across all cloud providers. Whether you deploy to EKS (AWS), GKE (Google Cloud), AKS (Azure), or self-hosted Kubernetes, the platform components (kpack, cert-manager, nginx-ingress) see the same Kubernetes API.

How AstroPulse manages the cloud-specific complexity:

AstroPulse abstracts away the foundational differences between cloud providers so you don't have to deal with them:

- IAM Integration: IRSA (AWS) vs Workload Identity (GCP) vs Managed Identity (Azure)—you write the same ServiceAccount config, AstroPulse handles the cloud-specific identity binding

- Load Balancers: AWS ALB annotations vs GCP GLB vs Azure LB—AstroPulse translates to the right cloud provider's load balancer

- Storage Classes: EBS (AWS) vs Persistent Disk (GCP) vs Azure Disk—unified storage configuration that maps to cloud-specific volumes

- Network Plugins: VPC CNI (AWS) vs Kubenet (Azure) vs GKE's native networking—Kubernetes networking works consistently across all

- DNS Integration: Route53 (AWS) vs Cloud DNS (GCP) vs Azure DNS—external-dns automatically syncs with the right provider

The value: Write once, deploy anywhere. You define your platform in YAML once, and AstroPulse handles translating that intent to work with your cloud provider's specifics. Migrating between clouds means updating provider-specific settings, not rewriting your platform.

What changes when switching clouds:

- Cluster provisioner: EKS → GKE or AKS (in cluster config only)

- DNS provider: Route53 → Cloud DNS or Azure DNS (external-dns config)

- Container registry: ECR → Artifact Registry or ACR (image URLs)

- Identity/Auth: IRSA → Workload Identity or Managed Identity (behind the scenes)

What stays exactly the same:

- kpack, cert-manager, external-dns, nginx-ingress (all cloud-agnostic Kubernetes tools)

- AstroPulse application management and deployment workflows

- Developer workflow (.astropulse/ pattern, Git push → deploy)

- Nova AI troubleshooting and operations

- All your application manifests and configurations

The guide uses AWS for examples. The same architecture works identically on supported cloud providers (AWS and GCP currently, Azure coming soon).

🔄 How do I handle updates and maintenance?

With AstroPulse's intent-based architecture, upgrading is simple: Edit YAML → Apply → Done. Zero downtime, automatic rollback on failures.

Traditional platforms: Multi-step procedures, maintenance windows, complex rollback plans. AstroPulse: Declare your intent in YAML, platform handles the rest.

Example: Upgrade kpack from v0.13.0 to v0.14.0

# apps/core-services/kpack.yaml
# Just change the version - that's your new intent!
spec:
  source:
    git:
      url: https://github.com/buildpacks-community/kpack
      ref: v0.14.0 # ⬅️ Changed from v0.13.0 to v0.14.0

# Apply your new intent
$ astroctl app apply -f apps/core-services/kpack.yaml

# AstroPulse handles everything:
# ✅ Downloads new kpack v0.14.0 manifests
# ✅ Validates compatibility with your cluster
# ✅ Performs rolling update with zero downtime
# ✅ Monitors health checks during upgrade
# ✅ Auto-rollback if anything fails

Every component follows the same pattern:

- Update version in YAML (your new intent)

- Run astroctl app apply -f <file>

- AstroPulse orchestrates the upgrade safely

Built-in safety: Pre-flight checks, rolling updates, health monitoring, automatic rollback, full audit trail, GitOps ready.

Rollback: git revert HEAD && astroctl app apply -f <file>

Automated maintenance (zero manual intervention):

🤖 Self-Healing Platform

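- cert-manager - Auto-renews TLS certificates before they expire (by default once two-thirds of a certificate's lifetime has passed, i.e. about 30 days before a 90-day Let's Encrypt certificate expires)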

- external-dns - Auto-updates DNS records when Ingresses change

- Cluster auto-scaling - Automatically adds/removes nodes based on demand

- Self-healing - Failed pods automatically restart, unhealthy nodes replaced

- kpack - Auto-rebuilds applications when base images are updated (security patches)

- Security tools - If you add Trivy/Kyverno (via Nova), they maintain themselves
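cert-manager normally creates Certificates automatically from annotated Ingresses, so you only write one yourself to override defaults. A sketch that makes the renewal window explicit; the names and issuer are placeholders, and 720h (30 days) simply restates the default window for a 90-day certificate:

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: latency-app-tls
  namespace: default
spec:
  secretName: latency-app-tls          # where the key pair is stored
  dnsNames:
    - latency-app.your-domain.com      # placeholder hostname
  issuerRef:
    name: letsencrypt-prod             # hypothetical ClusterIssuer
    kind: ClusterIssuer
  renewBefore: 720h                    # renew 30 days before expiry
```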

Your platform maintains itself. You only intervene when you want to change your intent (add features, upgrade versions, etc.).

Kubernetes upgrades: Coming soon via astroctl clusters upgrade command. For now, manage through AstroPulse console or cluster recreation.

GitOps approach - disaster recovery built-in:

📦 Your Platform is Code

Because everything is YAML in Git:

- Disaster recovery - Rebuild entire platform from Git in 30 minutes

- Multi-environment - Same configs across dev/staging/prod (change profile name only)

- Code review - All changes go through pull requests

- Audit compliance - Full history of who changed what and when

- Automated testing - CI/CD validates configs before merging

Example: Complete data center failure? Spin up new cluster in different region, point to same Git repo, and your entire platform is restored in 30 minutes.

💬 "Review my platform upgrade plan from kpack v0.13.0 to v0.14.0 and validate for breaking changes"

Nova analyzes upgrade paths, identifies potential issues, and suggests migration steps.

The key insight: With AstroPulse's intent-based approach, you declare what you want (version, configuration, features), and the platform figures out how to get there safely. No manual procedures, no tribal knowledge, no upgrade anxiety.

🚀 Can I white-label this for my customers?

Absolutely! That's one of the three primary use cases.

For a SaaS Product Platform:

- ✅ Brand it - Use your company branding, domain, and logos

- ✅ Monetize it - Charge customers for deployments

- ✅ Customize it - Add your own features and integrations

- ✅ Multi-tenant - Isolate customer workloads with namespaces

- ✅ Billing integration - Track usage per customer

Examples: Render and Fly.io both started this way.

Time to market: 30 min base platform + 1-2 weeks for multi-tenancy and billing integrations.
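A starting point for namespace-based tenant isolation is one namespace per customer with a ResourceQuota and a default-deny-style NetworkPolicy. A sketch with hypothetical tenant names and quota values; it assumes the ingress controller runs in a namespace named ingress-nginx and that your CNI enforces NetworkPolicy (on EKS, the VPC CNI's network policy feature or an add-on such as Calico). The tenant label can also serve as the key for per-customer usage tracking.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-acme                    # hypothetical tenant
  labels:
    tenant: acme                       # key for per-customer usage/billing queries
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: tenant-quota
  namespace: tenant-acme
spec:
  hard:                                # illustrative plan limits
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "50"
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolate-tenant
  namespace: tenant-acme
spec:
  podSelector: {}                      # applies to every Pod in the namespace
  policyTypes: [Ingress]
  ingress:
    - from:
        - podSelector: {}              # traffic from the same tenant namespace
        - namespaceSelector:           # traffic from the ingress controller
            matchLabels:
              kubernetes.io/metadata.name: ingress-nginx
```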

Conclusion

The key insight from this guide is how simple it is to build production-grade deployment platforms when you have the right foundation and the right co-pilot—bringing Vercel-like developer experience to environments where managed services can't be used.

This guide used AWS as an example, but the same architecture maps onto other cloud providers:

| Component | AWS | GCP | Azure | Self-Hosted |
|---|---|---|---|---|
| Kubernetes | EKS | GKE | AKS | Any K8s |
| DNS | Route53 | Cloud DNS | Azure DNS | Any DNS |
| Container Registry | ECR | Artifact Registry | ACR | Docker Hub, etc. |
| Identity/Auth | IRSA (IAM roles) | Workload Identity | Managed Identity | Service accounts |

What stays the same across ALL providers:

- kpack, cert-manager, external-dns, nginx-ingress

- AstroPulse application management

- Nova AI troubleshooting

- Developer workflow (.astropulse/ pattern)

With AstroPulse + open-source tools, you can build:

🏢 An Internal Developer Platform - Give your 100+ engineering teams self-service deployments. Deploy in 30 minutes, customize in 1-2 days.

💰 A SaaS Product Platform - Build a deployment platform as your product. Core platform in 30 minutes, multi-tenancy in 1-2 weeks.

🔒 An Enterprise Compliance Platform - Meet HIPAA, SOC2, PCI-DSS, FedRAMP requirements. Core platform in 30 minutes, compliance controls in 1-2 weeks.

What you get:

- ✅ Modern developer experience - Git push → automatic builds → live deployment with TLS

- ✅ Complete ownership - Your infrastructure, your branding, your monetization

- ✅ Multi-cloud flexibility - Deploy on AWS, GCP, Azure, or on-premises

- ✅ Cost efficiency - No per-seat fees, 50-80% cost reduction at scale

- ✅ AI-enhanced - Nova AI for troubleshooting and optimization

We've created a complete working example that you can deploy in 30 minutes:

→ paas-platform-example - Full source code with:

- ✅ Automated setup scripts (setup-platform.sh + deploy-app.sh)

- ✅ Production-ready EKS cluster configuration

- ✅ All infrastructure services pre-configured

- ✅ Sample application (latency-app) with complete deployment flow

- ✅ Step-by-step guide

$ git clone {REPO_URL}
$ cd astro-platform-apps/{EXAMPLE_PATH}
$ ./setup-platform.sh --deploy
$ ./deploy-app.sh

Need help? Contact the AstroPulse team.

- AstroPulse Documentation - Deep dive into platform capabilities

- Nova AI Platform Engineering - AI-enhanced infrastructure management

- kpack Documentation - Kubernetes-native builds

- Cloud Native Buildpacks - Buildpacks ecosystem